TB Connect installation

TB Connect is an optional component that serves as a bridge between your internal environment and TrustBuilder’s cloud environment. It provides a local gateway that allows TrustBuilder to interact with systems like LDAP, Active Directory, and other internal applications that don't support federation protocols (e.g., SAML or OpenID Connect). It enables secure access to internal systems without exposing them to the internet. By managing all connections locally, TB Connect ensures that your internal systems remain protected from external access.

TB Connect consists of multiple services running within Docker containers:

Orchestrator: Manages the authentication and authorization flows.

Gateway: Handles external requests and routes them to the appropriate services.

TrustBuilder Administration (TBA): Handles administrative functions.

MySQL database: Stores structured data such as user information, authorizations, and configurations.

Redis database: Manages user sessions, caches frequently accessed data, and facilitates asynchronous communication.

Deployment models

Docker deployment is the only officially supported and tested method for deploying TB Connect. Other deployment models (Public Cloud, Private Cloud, PaaS) are not tested or recommended at this time.

Requirements

Before starting the deployment, make sure that you meet the following requirements.

System Requirements

CPU: Minimum 4 cores

RAM: Minimum 8 GB

Network Access: Open the following ports:

443 (HTTPS)

6379 (Redis)

3306 (MySQL, or the custom port if changed)
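The CPU and memory minimums above can be checked on the host before deployment. The following is a minimal sketch for a Linux host, assuming nproc and /proc/meminfo are available. The helper names meets_minimum and report are hypothetical and are not part of TB Connect.

```shell
#!/bin/sh
# Hypothetical preflight helpers; not part of TB Connect.

# Succeeds when the measured value is at least the required minimum.
meets_minimum() {
    # $1 = measured value, $2 = required minimum (both integers)
    [ "$1" -ge "$2" ]
}

# Prints one requirement as OK or TOO LOW.
report() {
    # $1 = label, $2 = measured value, $3 = required minimum
    if meets_minimum "$2" "$3"; then
        echo "$1: $2 (minimum $3) OK"
    else
        echo "$1: $2 (minimum $3) TOO LOW"
    fi
}

# Linux-only measurements (assumption: nproc and /proc/meminfo exist).
if command -v nproc >/dev/null 2>&1 && [ -r /proc/meminfo ]; then
    cores=$(nproc)
    # MemTotal is reported in kB; convert to whole GB, rounding up,
    # because the kernel reports slightly less than the installed RAM.
    mem_gb=$(awk '/^MemTotal:/ { printf "%d", ($2 + 1048575) / 1048576 }' /proc/meminfo)
    report "CPU cores" "$cores" 4
    report "RAM (GB)" "$mem_gb" 8
fi
```

The thresholds 4 and 8 come from the requirements listed above. Port availability is not checked here, since that depends on your firewall and network setup.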

Software Requirements

Install the following according to your operating system:

Access Requirements

Contact TrustBuilder Support to get access to:

docker.trustbuilder.io (TrustBuilder’s private Docker registry)

Deploy TB Connect

1. Download the necessary configuration files

Download the TB Connect Docker file which contains:

docker-compose.yml: defines the services and their configuration.

.env: contains environment variables for the deployment.

2. Configure the environment variables

TB Connect requires specific environment variables to be set in the .env file. These variables define the repository location, TB Connect version and authentication credentials. Edit the .env file to set the required variables.

✅ Currently, the supported version is 10.5.1.

Here is a .env file template:

# TrustBuilder specific settings

TB_BASE_REPOSITORY=docker.trustbuilder.io

TB_CONNECT_VERSION=10.5.1

# Passwords

TB_ORCH_DB_PASSWORD=XXX

TB_ORCH_IDHUB_ADMIN_PASSWORD=XXX

TB_ORCH_IDHUB_ENC_PWD=XXX

TB_TBA_ENC_PWD=XXX

# MySQL

MYSQL_PASSWORD=XXX

MYSQL_ROOT_PASSWORD=XXX

# Microsoft SQL Server

MYSQL_SA_PASSWORD=XXX

.env variable descriptions

TB_BASE_REPOSITORY: The Docker repository where service images are stored.

TB_CONNECT_VERSION: The version of TB Connect to be deployed.

TB_ORCH_DB_PASSWORD: The database password for the orchestrator.

TB_ORCH_IDHUB_ADMIN_PASSWORD: The administrator password for IDHub in the orchestrator.

TB_ORCH_IDHUB_ENC_PWD: The encryption password for the orchestrator.

TB_TBA_ENC_PWD: The encryption password for the TBA service.

Before using TB Connect in production, replace the default passwords with strong and unique values.
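One way to follow this advice is to list the variables that still hold the XXX placeholder and generate a random value for each. The following is a minimal sketch, assuming openssl is installed. The helper names gen_secret and list_placeholders are hypothetical and are not part of TB Connect. Hexadecimal output is used because the source does not state which characters TB Connect accepts in passwords.

```shell
#!/bin/sh
# Hypothetical helpers; not part of TB Connect.

# Prints a random 48-character hexadecimal secret (24 random bytes).
gen_secret() {
    openssl rand -hex 24
}

# Prints the names of variables in a .env file whose value is still
# the XXX placeholder from the template.
list_placeholders() {
    # $1 = path to the .env file
    sed -n 's/^\([A-Za-z_][A-Za-z0-9_]*\)=XXX$/\1/p' "$1"
}

# Usage, from the folder that contains the .env file:
#   list_placeholders .env    # shows which variables still need a value
#   gen_secret                # run once per variable and paste the result
```

Generating a separate value for each variable keeps the passwords unique, as recommended above.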

3. Log in to the TrustBuilder private Docker registry

docker login docker.trustbuilder.io

Contact TrustBuilder Support to get your username and password.

4. (Linux only) Clean Docker environment

This step is required only if a previous deployment has been run.

It must be executed inside the folder where your docker-compose.yml is located (not at the root of the OS).

Use the following command to completely clean the Docker environment:

docker compose down -v; sudo rm -rf ./data

docker compose down stops and removes all containers and networks created by docker compose.

-v additionally removes named volumes associated with the services.

sudo rm -rf ./data deletes a local directory named ./data. This folder might be used as a mounted volume in your docker-compose.yml to persist files or databases outside the container.

5. Start TB Connect with Docker Compose

Use the following command to start the services:

docker compose up -d

If after several minutes the services fail to start, run the same command without -d (docker compose up). This shows detailed logs for troubleshooting.

6. Verify that the services are running

Check active containers:

docker ps

This command lists all the containers currently running, along with their status, ports, and other relevant details.

Once the services are up and running, the following components should be active:

docker-gateway-1

docker-orchestrator-1

docker-redis-1

docker-tba-1

docker-db-1
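The comparison between the running containers and the expected list can be scripted. The following is a minimal sketch. The helper name missing_containers is hypothetical and is not part of TB Connect. It assumes the container names listed above. Docker Compose derives the docker- prefix from the project name, which defaults to the name of the folder containing docker-compose.yml, so the names may differ in your environment.

```shell
#!/bin/sh
# Hypothetical helper; not part of TB Connect.
# Reads container names on stdin (one per line) and prints every expected
# TB Connect container that is missing from that list.
missing_containers() {
    running=$(cat)
    for name in docker-gateway-1 docker-orchestrator-1 docker-redis-1 docker-tba-1 docker-db-1; do
        # -x matches whole lines only, -F treats the name as a fixed string
        printf '%s\n' "$running" | grep -qxF "$name" || echo "$name"
    done
}

# Usage on a host with Docker installed:
#   docker ps --format '{{.Names}}' | missing_containers
```

An empty result means all five containers are running. Any name that is printed identifies a service to investigate with docker compose up (without -d).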

7. Access TrustBuilder Admin portal

Once TB Connect is running, you can access the TrustBuilder Admin portal locally.

Open your web browser and navigate to the following URL: https://localhost/idhub/admin

Log in with your admin credentials:

Username: administrator

Password: defined in .env under TB_ORCH_IDHUB_ADMIN_PASSWORD

You should now have access to the Admin portal, where you can perform administrative tasks.

8. Manage license

You should now request and upload a license.

In the TrustBuilder Admin portal, go to Certificates.

Find the certificate with alias: idhub-encrypt.

Click the binoculars icon to view certificate details.

Copy the certificate, including -----BEGIN CERTIFICATE----- and -----END CERTIFICATE-----.

Open a TrustBuilder Support ticket.

Request a license and paste the copied certificate into the ticket message.

TrustBuilder Support will send you a license file.

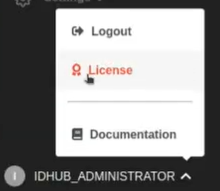

In the TrustBuilder Admin portal, click IDHUB_ADMINISTRATOR (bottom-left), then select License.

Click Select file, then upload the license file you received.

Your license should now be active.